Dhruvesh Patel

dhruveshpate@umass.edu

🌟 I’m looking for internship opportunities for Summer 2024. Here is my CV. 🌟

I am currently a third-year Computer Science PhD student at UMass Amherst, where I have the privilege of working under the guidance of Prof. Andrew McCallum alongside some amazing colleagues at the Information Extraction and Synthesis Laboratory. Before embarking on my doctoral journey, I completed my undergraduate studies at IIT Madras, where I delved deep into robotics research under the mentorship of Prof. Bandyopadhyay.

Outside of my academic pursuits, I’ve been fortunate to work with some amazing collaborators from industry. I have worked as a research scientist intern at Meta Reality Labs and Abridge AI. Before beginning my master’s program at UMass, I worked for two years as a software engineer at MathWorks. I also spent a year collaborating with Prof. Partha Talukdar on solving various NLP problems in industry.

research

As someone deeply fascinated by the abstract concepts of mathematics and driven to create tangible impact in the world, I strive for a balanced approach in my research. My focus is on bridging the gap between abstract ideas and practical applications of machine learning. This dual approach has led me to explore applications of machine learning in robotics, NLP, and knowledge graphs, while also nurturing my passion for foundational aspects of machine learning such as representation learning.

While most representation learning methods focus only on metric learning, my work on box embeddings aims to show that representations can also capture other kinds of structure, such as algebraic and relational structure, thereby allowing models to perform compositional reasoning. I believe that learning objectives and uncertainty quantification methods based solely on probability are restrictive. As a result, I have explored energy models, where the goal is to use an energy model as a learned loss function for training a feedforward prediction network.

Various frameworks for quantifying uncertainty have been proposed over the years; however, none of them is well suited to measuring uncertainty in modern deep learning models such as transformers. My current research focuses on creating novel methods to model uncertainty in transformer-based models. I am also interested in analyzing the limits of compositional generalization achievable through in-context learning with transformers, approaching the question from the perspective of minimum description length and compression.

affiliations and internships

news

| Apr 1, 2024 | Language Guided Exploration for RL Agents in Text Environments was accepted at NAACL (findings) 2024. |

|---|---|

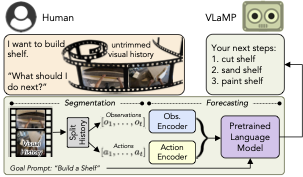

| Aug 1, 2023 | My work on Pre-trained language models for Visual Planning for Human Assistance, done as a research intern at Meta Reality Labs., has been accepted at ICCV 2023. |

| Sep 7, 2022 | Super excited to start my internship at Meta Reality Labs! |

| Apr 25, 2022 | Excited to present our work on multi-label classification using box embeddings at ICLR 2022! |

| Nov 1, 2020 | Happy to announce that I will be starting my Ph.D. in Spring (January) 2021 at UMass Amherst with Prof. Andrew McCallum as my advisor. |

mentors and collaborators

I have been fortunate to work with many amazing people over the years. Here is a list of my current and previous collaborators.

Michael Boratko [Google] (2019 - 2025)

Tahira Naseem [IBM] (2023 - 2023)

Akash Srivastava [MIT-IBM Research] (2023 - 2023)

Keerthiram Murugesan [IBM] (2022 - 2023)

Kenneth Clarkson [IBM] (2023 - 2023)

Kartik Talamadupula [IBM] (2019 - 2019)

Pavan Kapanipathi [IBM] (2019 - 2019)

Jay-Yoon Lee [Seoul National University] (2020 - 2022)

Partha Talukdar [IISc Bangalore/Google Research] (2018 - 2018)

Sandipan Bandyopadhyay [IIT Madras] (2016 - 2016)

Ruta Desai [Meta AI] (2022 - 2023)

Unnat Jain [Meta AI] (2023 - 2023)