Pretrained Language Models as Visual Planners for Human Assistance

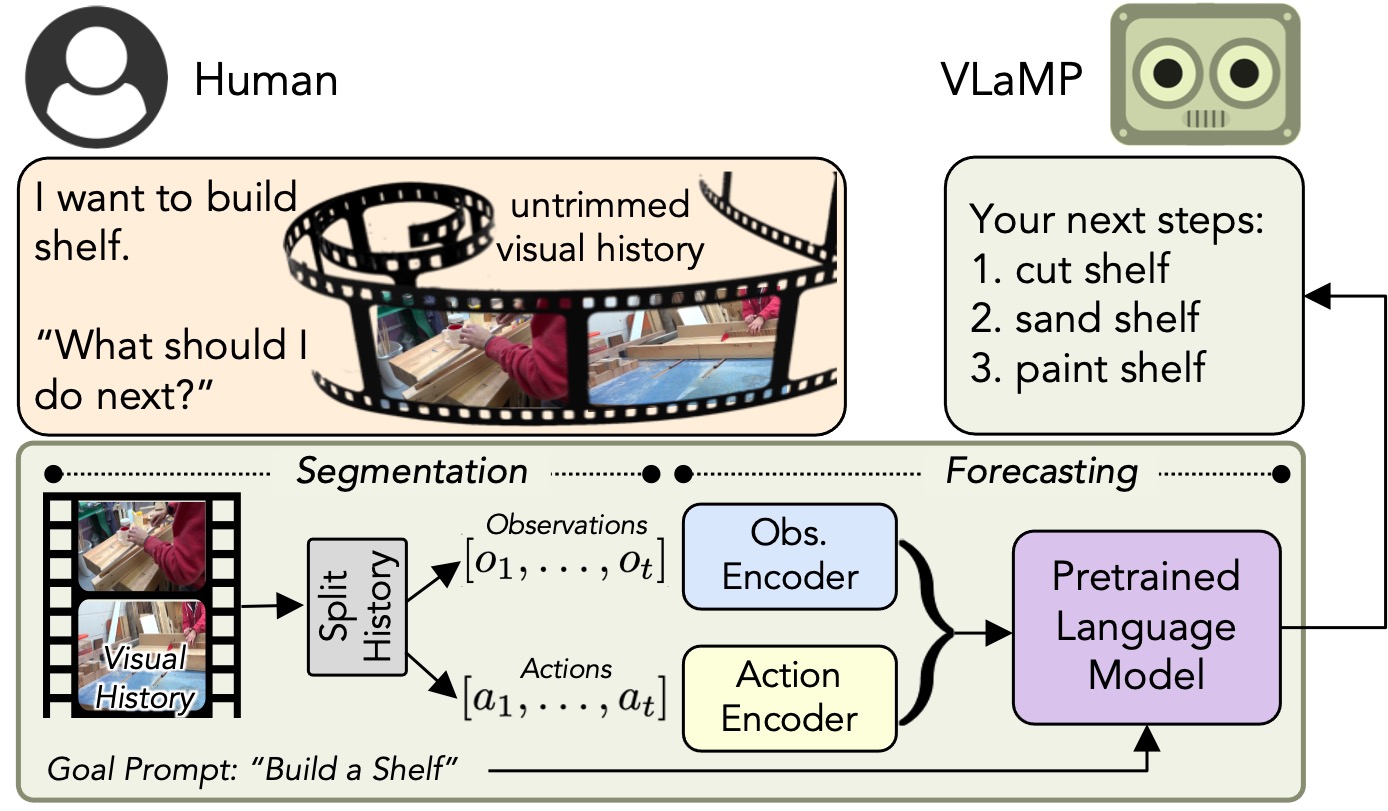

To make progress towards multi-modal AI assistants that can guide users to achieve complex multi-step goals, we propose the task of Visual Planning for Assistance (VPA). Given a goal briefly described in natural language, e.g., “make a shelf”, and a video of the user’s progress so far, the aim of VPA is to obtain a plan, i.e., a sequence of actions such as “sand shelf”, “paint shelf”, etc., to achieve the goal. This requires assessing the user’s progress from the untrimmed video and relating it to the requirements of the underlying goal, i.e., the relevance of actions and the ordering dependencies amongst them. Consequently, this requires handling long video history and arbitrarily complex action dependencies. To address these challenges, we decompose VPA into video action segmentation and forecasting. We formulate the forecasting step as a multi-modal sequence modeling problem and present Visual Language Model based Planner (VLaMP), which leverages pre-trained LMs as the sequence model. We demonstrate that VLaMP performs significantly better than baselines with respect to all metrics that evaluate the generated plan. Moreover, through extensive ablations, we also isolate the value of language pre-training, visual observations, and goal information on the performance. We release our data, model, and code to enable future research on Visual Planning for Assistance.
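The two-stage decomposition described above (segment the video history into actions, then forecast the remaining actions given the goal) can be illustrated with a toy sketch. This is not VLaMP: the forecaster below is a simple bigram table swapped in for the pre-trained LM, the segmentation step is a stub over per-frame labels, and every name here (`segment_actions`, `ToyForecaster`, the "cut wood" and "assemble shelf" actions) is hypothetical and chosen only for illustration.

```python
from collections import Counter, defaultdict
from typing import Dict, List, Optional, Sequence, Tuple


def segment_actions(frame_labels: Sequence[str]) -> List[str]:
    """Stand-in for video action segmentation (assumption: per-frame labels
    are already available). Merges consecutive duplicates and drops
    "background" frames to obtain an ordered action history."""
    history: List[str] = []
    previous: Optional[str] = None
    for label in frame_labels:
        if label != previous and label != "background":
            history.append(label)
        previous = label
    return history


class ToyForecaster:
    """Goal-conditioned bigram model; a placeholder for a learned sequence model."""

    def __init__(self) -> None:
        self.counts: Dict[Tuple[str, Optional[str]], Counter] = defaultdict(Counter)

    def fit(self, goal: str, plans: Sequence[Sequence[str]]) -> None:
        # Count how often each action follows another under this goal.
        for plan in plans:
            for prev, nxt in zip(plan, plan[1:]):
                self.counts[(goal, prev)][nxt] += 1

    def forecast(self, goal: str, history: Sequence[str], horizon: int) -> List[str]:
        # Greedily pick the most frequent successor, up to `horizon` steps.
        plan: List[str] = []
        current: Optional[str] = history[-1] if history else None
        for _ in range(horizon):
            options = self.counts.get((goal, current))
            if not options:
                break
            current = options.most_common(1)[0][0]
            plan.append(current)
        return plan


# Hypothetical training plan and observed frames for the goal "make a shelf".
forecaster = ToyForecaster()
forecaster.fit(
    "make a shelf",
    [["cut wood", "assemble shelf", "sand shelf", "paint shelf"]],
)
frames = ["background", "cut wood", "cut wood", "assemble shelf", "assemble shelf"]
history = segment_actions(frames)
plan = forecaster.forecast("make a shelf", history, horizon=2)
```

Keeping the two stages behind separate functions mirrors the decomposition: the forecaster only ever sees the goal and a discrete action history, so either stage could be replaced independently.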

Cite

@InProceedings{Patel_2023_ICCV,
    author    = {Patel, Dhruvesh and Eghbalzadeh, Hamid and Kamra, Nitin and Iuzzolino, Michael Louis and Jain, Unnat and Desai, Ruta},
    title     = {Pretrained Language Models as Visual Planners for Human Assistance},
    booktitle = {Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV)},
    month     = {October},
    year      = {2023},
    pages     = {15302-15314}
}