Dhruvesh Patel

dhruveshpate@umass.edu

I am currently a fourth-year Computer Science PhD student at UMass Amherst working with Prof. Andrew McCallum alongside some amazing colleagues at the Information Extraction and Synthesis Laboratory. I completed my undergraduate degree at IIT Madras, where I worked on robotics research, mentored by Prof. Bandyopadhyay.

Outside of my academic pursuits, I’ve been fortunate to have worked with some amazing collaborators from industry. I have worked as a research scientist intern at Meta Reality Labs and Abridge AI. Before beginning my master’s program at UMass, I worked for two years as a software engineer at MathWorks. I also dedicated a year to collaborating with Prof. Partha Talukdar on solving various NLP problems in the industry.

CV available at the bottom of this page.

research

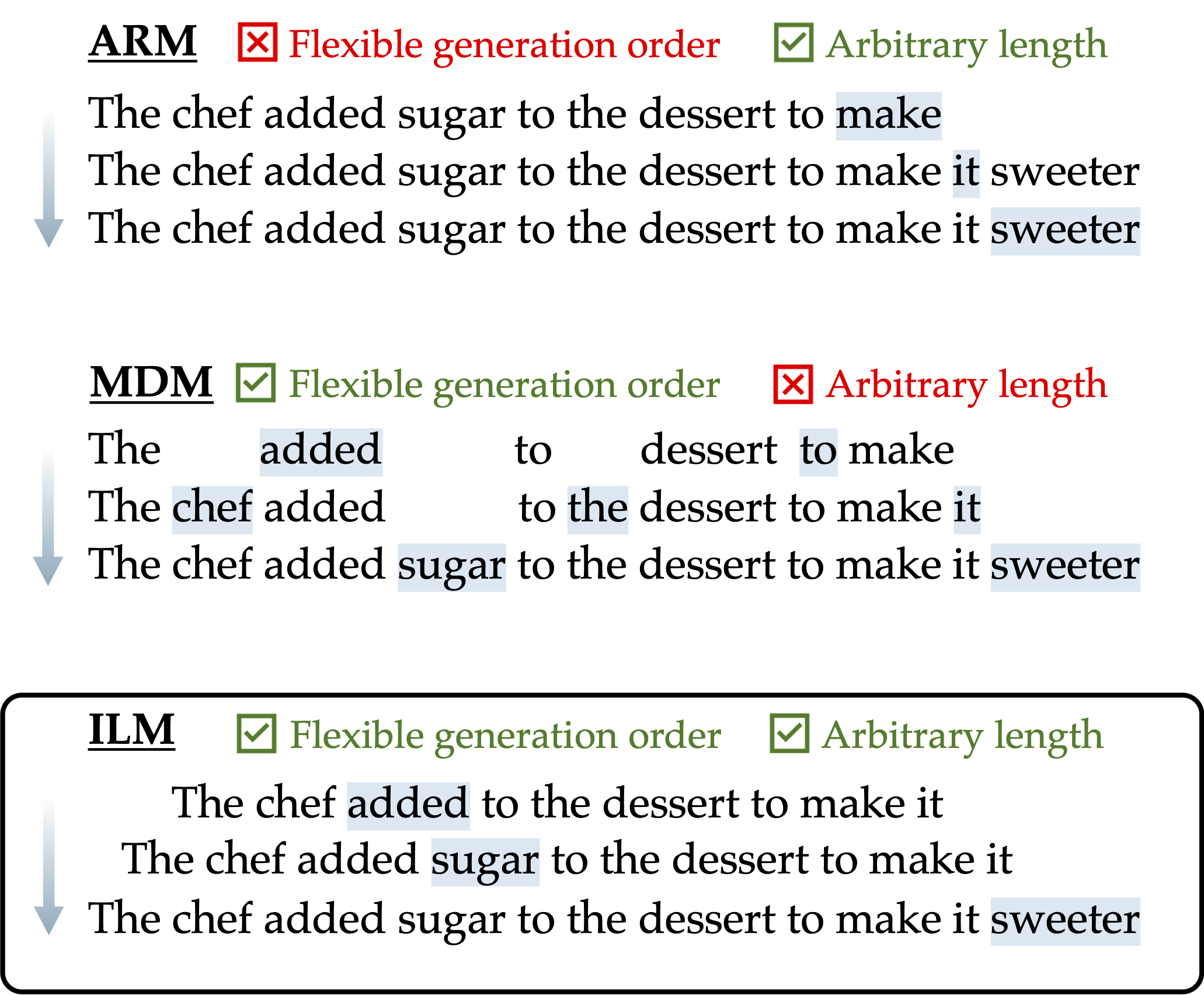

Autoregressive models dominate the scene for generative modeling of non-ordinal discrete data, like text, mostly due to the scalability of pre-training. However, as generative models they have many limitations: limited conditioning and control at inference time, inefficient use of inference-time computation by tying the sequence length to the computation, and inability to support non-sequential forms of interaction like edits or deletions. I’m interested in scaling non-autoregressive models like discrete diffusion and flows for text generation, either by adapting pre-trained AR models through continued training or by making non-AR pre-training more efficient.

Prior to this, I have worked on non-Euclidean representation learning, energy-based models for discrete data, and compositional generalization in-context learning.

affiliations and internships

news

| Oct 1, 2025 | I will be presenting Improved Sampling from Masked Diffusion Models with Position Contrastive Guidance at the Structured Probabilistic Inference and Generative Models workshop at NeurIPS 2025. |

|---|---|

| Jun 1, 2025 | Work on Insertion Language Models (ILMs) is out on arXiv! It will be presented at the Structured Probabilistic Inference and Generative Models workshop at NeurIPS 2025. |

| Oct 1, 2024 | Learning Representations for Hierarchies with Minimal Support was accepted at NeurIPS 2024! |

| Apr 1, 2024 | Language Guided Exploration for RL Agents in Text Environments was accepted at NAACL (findings) 2024. |

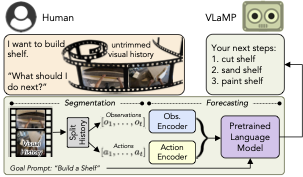

| Aug 1, 2023 | My work on Pre-trained language models for Visual Planning for Human Assistance, done as a research intern at Meta Reality Labs., has been accepted at ICCV 2023. |

mentors and collaborators

I have been fortunate to work with many amazing people over the years. Here is a list of my current and previous collaborators.

Michael Boratko [Google] (2019 - 2025)

Tim Rudner [NYU] (2024 - 2025)

Ramon Astudillo [IBM Research] (2025 - 2025)

Tahira Naseem [IBM] (2023 - 2023)

Akash Srivastava [MIT-IBM Research] (2023 - 2023)

Keerthiram Murugesan [IBM] (2022 - 2023)

Kenneth Clarkson [IBM] (2023 - 2023)

Kartik Talamadupula [IBM] (2019 - 2019)

Pavan Kapanipathi [IBM] (2019 - 2019)

Jay-Yoon Lee [Seoul National University] (2020 - 2022)

Partha Talukdar [IISc Bangalore/Google Research] (2018 - 2018)

Sandipan Bandyopadhyay [IIT Madras] (2016 - 2016)

Ruta Desai [Meta AI] (2022 - 2023)

Unnat Jain [Meta AI] (2023 - 2023)